All published articles of this journal are available on ScienceDirect.

Exploring the Perceived Social Support (PSS) of ChatGPT among Female Migrants in the Gulf Countries: A Qualitative Analysis

Abstract

Introduction

Social support can positively affect individuals’ well-being, mental health, and perceived quality of life. When migrants leave their country of origin, they might lose their strong social networks and supporting ties. As reshaping social networks after migration might be challenging, this study aims to examine female migrants’ perceptions of ChatGPT’s potential to serve as a source of social support in four dimensions: emotional, instrumental, informational, and appraisal.

Methods

Employing a qualitative research method, we conducted online semi-structured interviews with 64 female migrants who had been working in the Gulf countries for less than a year. We contacted 300 educated, working female migrants in the Gulf countries through LinkedIn, of whom 64 volunteered to participate in the interviews. All participants were familiar with ChatGPT-3.5 and had used its free version to receive social support for at least two weeks before the interviews. We used qualitative framework analysis to examine participants' perspectives, applying thematic coding to highlight important aspects of perceived social support. Two researchers independently coded the data to ensure a thorough analysis, and peer debriefing was carried out to validate the results.

Results

The study found that ChatGPT has potential as a source of emotional, instrumental, informational, and appraisal support, but it lacks genuine human warmth and authentic interaction. While it is beneficial for professional tasks, skill development, and access to accurate information, its feedback often lacks the depth needed for personalized reasoning. The findings suggest that future AI improvements are needed to overcome these flaws and to make its support more complete and efficient.

Discussion

This study investigates the use of ChatGPT-3.5 in providing social support to working female migrants in the Gulf region. Interviews with 64 educated migrants revealed that ChatGPT could offer emotional support related to love, trust, and empathy but could not replace human interaction because it lacks human-like features.

Conclusion

The study concluded that ChatGPT offered valuable emotional, instrumental, informational, and appraisal support for female migrants.

1. INTRODUCTION

Human life is shaped by one's presence in society and interaction with other people; it is not lived in isolation. People rely on support from others in their community, such as financial or social support, to grow and progress in life. Individuals' expectations, perceptions, and experiences of the support they receive can significantly shape their overall well-being and quality of life. Social support is a vital component of every community and society. Perceived Social Support (PSS) refers to how people feel about the presence and availability of close friends, family, or other supportive entities during challenging moments. Gender, cultural background, and ethnicity can shape how individuals experience social support, as individuals' needs and expectations around seeking help can differ [1]. Several studies have also examined its critical role in psychological outcomes, mental health, and well-being, as well as in helping individuals cope with various stressors [2-5].

Many factors may affect individuals' decisions on internal or international migration, and adapting to a new environment can be challenging. Many studies have examined the importance of social support in assisting migrant populations in this new chapter of their lives [6-8]. There are several reasons for the strong research focus on this area. Firstly, integrating into a new country involves significant socioeconomic and cultural shifts, requiring migrants to seek informational, financial, and language support for smoother integration [9, 10]. Secondly, migrants may experience mental health problems [11, 12] and face hardships such as social isolation, bigotry, or a lack of emotional support [9, 13, 14]. Chatbots can play a significant role in addressing such concerns [15]. For example, immigrants in Australia reported improved well-being, experiencing less discrimination because of social support [16]. Studies suggest that a variety of problems, such as job stress, low self-confidence, and depression, can be addressed through social support [17]. The absence of PSS strengthens migrants' identification with their country of origin and affects their mental well-being [18].

Migration poses additional challenges for women, who must manage multiple stressors, including household chores and child-rearing, without the support of their family members [19]. Receiving social support helps migrant mothers cope better with stress and decreases their risk of depression in the workplace [20, 21]. This issue is important because women are at higher risk of mental health problems than their male counterparts, as they have fewer opportunities to integrate into society [22].

Available studies on PSS have focused on interpersonal support, in which human agents offer social support [23]. With technological developments since 1996, chatbots have been introduced to address distress and isolation [24]. In the absence of social support, chatbots can serve as companions [25]. Equipped with features such as empathetic language, they can be powerful tools that help individuals overcome negative feelings and social isolation [26].

Released in November 2022, ChatGPT is built on the transformer-based GPT architecture and pre-trained on large datasets; its development and accessibility opened a new horizon in human-computer interaction. Representing a considerable advance over early chatbots, it offers contextually aware, creative conversations that go well beyond basic pattern matching. Since then, a growing number of studies have examined the potential of ChatGPT in various fields, for instance, education and mental health [27-30]. Governmental and non-governmental organizations across the world have started using a variety of chatbots to provide psychological support for migrants. Surprisingly, little is known about the potential of ChatGPT for social support. Targeting educated working women in the Gulf countries, the current study examines the perceived effectiveness of the bot in providing four types of social support, namely emotional, instrumental, informational, and appraisal. By partly filling the gap in this area, the results can provide a distinctive perspective on the dynamics of migration and social support in the modern world. This investigation is vital for gauging the extent to which AI, in its current state, can bridge the gap in social support for women facing the unique challenges of migration and resettlement.

2. LITERATURE REVIEW

2.1. Theoretical Framework for Perceived Social Support

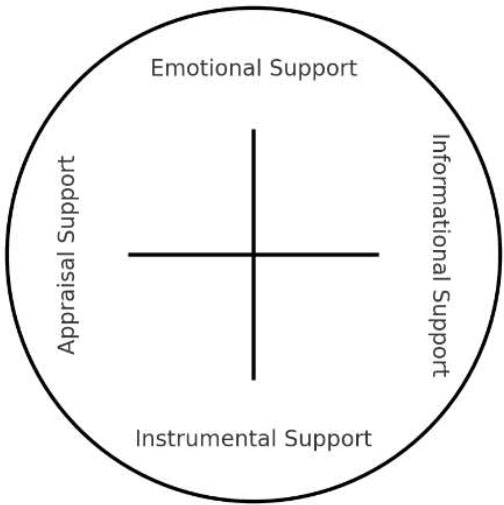

Since ChatGPT emerged, various studies have examined the bot's potential and limitations in improving human life. Ha et al. proposed a framework for PSS to represent social relationships and networks. This framework encompasses four types of support (Fig. 1), namely emotional (love, trust, empathy, and care), instrumental (time, money, and physical assistance), informational, and appraisal (evaluative comments, either constructive criticism or affirmation) support [4, 31].

Fig. 1. Dimensions of social support [4].

Because ChatGPT employs a Large Language Model (LLM), it can interact with users and provide emotionally supportive responses in an empathetic or encouraging tone. Such support is important because people in need may feel overwhelmingly isolated and avoid seeking any assistance [32]. These concerns can be addressed by chatbots equipped with strong emotional awareness [32]. Moreover, compared with humans, chatbots have been widely praised for providing emotional support in a nonjudgmental way [33], which is well documented in the healthcare sector [34]. Nonjudgmental listening and the possibility of personalized interactions can enhance the emotional well-being of Generation Z (Gen Z), a demographic deeply engaged in digital interactions [35]. ChatGPT might moderately reduce the perception of social isolation among the elderly [36]. Engaging with ChatGPT might also reduce perceived loneliness [37] by enhancing individuals' sense of belonging and social support. Self-help chatbots, such as ChatGPT, can offer emotional support to refugees and asylum seekers and help them deal with stress caused by displacement, transition, and social challenges [38]. However, some evidence suggests that ChatGPT can provide elementary emotional reinforcement but cannot substitute for professional psychological support in cases of emotional trauma, such as that associated with surgery [39].

ChatGPT can provide quick solutions to problems that people might encounter in various contexts, such as education, learning, and professional work [40, 41]. The practical help, or instrumental support, that ChatGPT offers can help individuals achieve their goals [4]. Companies are integrating ChatGPT into their workflows to improve collaboration and automate repetitive tasks. In education, automating tasks such as lesson planning can make the preparation of teaching materials more efficient. Novice special education teachers, for example, have indicated that ChatGPT helps them write appropriate IEP (Individualized Education Program) goals and can simplify the planning of educational activities [42]. Moreover, ChatGPT is widely recognized as one of the AI-based chatbots that provide informational support across various fields, including health, self-care, and education [43].

Appraisal support provided by ChatGPT can offer new opportunities to enhance evaluative feedback methods. As an AI conversational agent, ChatGPT is capable of delivering both criticism and affirmation, potentially adding value to the appraisal process in nuanced ways. For example, one case study highlights the benefits of ChatGPT in assisting early-career academics [44], describing how one-on-one (or chat-to-chat) interactions with ChatGPT supported their professional development by providing valuable feedback. Additionally, ChatGPT has been reported to adjust its responses based on the feedback it receives, responding positively to praise and reevaluating its outputs when met with criticism [45]. This adaptability is essential for creating a balanced assessment environment in which users feel both encouraged and constructively guided. Moreover, the use of AI for performance evaluation extends beyond educational settings: a comprehensive study on user intentions to adopt AI for various purposes revealed a high level of acceptance of AI in evaluative roles [46].

2.2. ChatGPT and Social Support for Migrants

Research on the role of ChatGPT in providing social support for migrants requires further development. To the best of the authors’ knowledge, no study has examined whether and how AI can provide emotional, instrumental, informational, and appraisal support for migrant women. Despite the importance of this topic, most previous studies have concentrated on local vulnerable populations, such as young people and the elderly, while little attention has been given to migrants [23, 36]. For instance, a study of the elderly found that AI-based conversational agents can play a substantial role in providing social support and decreasing loneliness [36].

Few studies have focused on the potential of AI to provide emotional support to refugees, who may experience isolation, culture shock, or discrimination in host countries. Some research has also explored ChatGPT’s role in offering instrumental support, such as learning assistance, to migrants. For example, a recent study examined how ChatGPT could support second-generation migrant students with learning disabilities in English for academic purposes [47]. The study highlighted both the potential benefits and the limitations of using ChatGPT: its availability and its ability to offer rapid academic assistance in a non-judgmental manner were key drivers of its use. However, another study found that concerns about over-reliance on ChatGPT in academic contexts discouraged some migrants from adopting it. The researchers noted that language barriers and unfamiliarity with healthcare systems in the host country posed significant challenges [48].

Additional studies have shown that ChatGPT can provide informational support on job safety for migrant workers [49], function as a counselor to ease the job search process [50], and even assist in cultural assimilation and integration [51]. Furthermore, it may help facilitate communication between refugees and host residents through its translation capabilities.

In terms of social support, researchers have found that chatbots can encourage users to reflect on feedback and help them gain fresh perspectives [23, 52]. Some studies have also explored the role of AI in enhancing case management for vulnerable migrants [53]. By automating routine tasks, improving service delivery, and enabling personalized support, ChatGPT may help overcome traditional barriers in migrant support systems.

Direct evidence examining ChatGPT’s role in providing evaluative feedback specifically to migrants is limited. However, ChatGPT has been reported to serve as a platform for addressing refugee health challenges. Studies have examined the effectiveness of the bot in offering formative feedback, for example, on students' writing [54]. Research on ChatGPT’s role in supporting migrants remains scarce, and the current study contributes to filling this gap by focusing on female migrants’ perceived effectiveness of ChatGPT.

3. METHODOLOGY

3.1. Research Design

Owing to the scarcity of studies examining how ChatGPT provides social support for female migrants, we selected a qualitative approach to achieve an in-depth understanding of how this under-researched group perceives ChatGPT as a source of social support. We did not aim to generalize the results but to explore the experiences of these women relying on ChatGPT as a companion for emotional, instrumental, informational, and appraisal support. Ethical approval for the research was obtained from Dhofar University, Sultanate of Oman.

3.1.1. Population and Sampling Technique

The study population comprised educated, working female migrants residing in the Gulf countries. Participants were purposively selected based on the following inclusion criteria: (a) being female migrants currently residing and working in any of the Gulf countries (e.g., Bahrain, Kuwait, Oman, Qatar, Saudi Arabia, or the United Arab Emirates); (b) the ability to interact with ChatGPT in English; (c) holding a bachelor’s degree or higher; (d) being currently employed in a professional capacity in the Gulf; and (e) having consistent and meaningful experience using ChatGPT.

There were several reasons for targeting this population. Firstly, previous studies suggest that interpersonal social support needs, experiences, and received support may differ between men and women [55]. Evidence from a large body of past research indicates that female migrants across host countries often experience disadvantaged circumstances relative to their male counterparts [56]. Women are known to seek support more frequently and to value emotional and relational health [57]. In addition, working female migrants may face unique challenges in balancing household chores and workplace demands, which makes them more vulnerable to the stresses of migration. Finally, it was practical to focus on educated, English-proficient female migrants, as participants could be found through professional platforms, and English was the language participants used to interact with ChatGPT. In this way, we attempted to minimize misinterpretation caused by language barriers and to ensure that responses reflected the constructs being investigated.

As educated people are more likely to use technology, we recruited participants through LinkedIn, a professional networking platform accessible to English-proficient, educated women in the Gulf region [58]. The profile information of participants who met the above-mentioned criteria was verified by checking their education, job title, and workplace. By applying these criteria, we sought to identify cases that would provide rich, in-depth data. Table 1 presents the participants’ demographic backgrounds.

| Category | Subcategory | Number (N) | Percentage (%) |
|---|---|---|---|
| Country of Residence | Bahrain | 8 | 12.5 |
| | Kuwait | 10 | 15.6 |
| | Oman | 7 | 10.9 |
| | Qatar | 9 | 14.1 |
| | Saudi Arabia | 12 | 18.8 |
| | United Arab Emirates | 18 | 28.1 |
| Age | 20-30 | 28 | 43.8 |
| | 31-40 | 22 | 34.4 |
| | 41-50 | 14 | 21.9 |
| Marital Status | Single | 27 | 42.2 |
| | Married | 32 | 50.0 |
| | Divorced | 5 | 7.8 |
| Having Children | Yes | 36 | 56.3 |
| | No | 28 | 43.8 |
| Length of Migration (Less than 12 months) | 1-6 months | 38 | 59.4 |
| | 7-12 months | 26 | 40.6 |
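As a quick arithmetic check on Table 1, each percentage can be recomputed from its count out of the 64 interviewees. The sketch below is illustrative only: the names `TABLE_1`, `percent`, and `check_table` are our own, not part of the study's materials, and it assumes the reported percentages were rounded half-up to one decimal place.

```python
from decimal import Decimal, ROUND_HALF_UP

N = 64  # total number of interviewees

# (count, reported percentage) pairs transcribed from Table 1.
TABLE_1 = {
    "Country of residence": {
        "Bahrain": (8, "12.5"), "Kuwait": (10, "15.6"), "Oman": (7, "10.9"),
        "Qatar": (9, "14.1"), "Saudi Arabia": (12, "18.8"),
        "United Arab Emirates": (18, "28.1"),
    },
    "Age": {"20-30": (28, "43.8"), "31-40": (22, "34.4"), "41-50": (14, "21.9")},
    "Marital status": {"Single": (27, "42.2"), "Married": (32, "50.0"), "Divorced": (5, "7.8")},
    "Having children": {"Yes": (36, "56.3"), "No": (28, "43.8")},
    "Length of migration": {"1-6 months": (38, "59.4"), "7-12 months": (26, "40.6")},
}


def percent(count, total):
    """Share of `total` as a percentage, rounded half-up to one decimal place."""
    return (Decimal(100 * count) / Decimal(total)).quantize(
        Decimal("0.1"), rounding=ROUND_HALF_UP
    )


def check_table(table, total):
    """Return a list of inconsistencies; an empty list means the table is consistent."""
    problems = []
    for category, rows in table.items():
        # Every category should account for all participants exactly once.
        n_sum = sum(count for count, _ in rows.values())
        if n_sum != total:
            problems.append(f"{category}: counts sum to {n_sum}, not {total}")
        # Each reported percentage should match the recomputed one.
        for label, (count, reported) in rows.items():
            computed = percent(count, total)
            if computed != Decimal(reported):
                problems.append(f"{category}/{label}: reported {reported}, computed {computed}")
    return problems


print(check_table(TABLE_1, N))
```

Every category sums to 64 and every reported percentage matches, so the check prints an empty list. `Decimal` with `ROUND_HALF_UP` is used because Python's built-in `round()` rounds exact halves to even and would give 56.2 rather than 56.3 for 36/64. Because of rounding, the Age and Having Children percentages sum to 100.1 rather than 100.0.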

3.1.2. Instruments

The semi-structured interview guide was designed to explore participants' perceptions of ChatGPT's ability to provide social support, focusing on the four commonly recognized dimensions of perceived social support: emotional, instrumental, informational, and appraisal. The guide allowed participants to elaborate on their experiences while ensuring that key elements were addressed in most interviews. Example questions from the interview guide included:

3.1.2.1. Emotional Support

Does ChatGPT give you a sense of emotional comfort and understand your feelings? What are your reasons for using ChatGPT? Have you ever trusted it enough to share your unique emotions with it? Please explain why.

3.1.2.2. Instrumental Support

Have you relied on ChatGPT to help resolve personal or professional problems? How effective has it been?

3.1.2.3. Informational Support

Do you use ChatGPT to receive the information you need? How do you evaluate the information? How has ChatGPT met your needs? In which aspects has ChatGPT failed to satisfy your needs?

3.1.2.4. Appraisal Support

Has ChatGPT just been nice? If there are any examples, please provide them.

The guide maintained a thematic structure that kept the interviews on track while allowing participants to digress and share detailed, personal experiences.


3.1.3. Procedure

Data collection took place from September to November 2023. A total of 300 potential respondents were contacted through LinkedIn, targeting 50 respondents from each of the six Gulf countries. Overall, 64 female migrants working in the Gulf countries agreed to participate in the interviews. All participants gave informed consent before taking part in the study; they were told that participation was voluntary and that they could withdraw at any time without any consequences. They were also assured of the confidentiality of their data. They were asked to use ChatGPT-3.5, which is free and easily accessible, for two weeks. All of the respondents were already familiar with this AI bot and used it frequently.

Interviews with each participant were scheduled through Google Meet. Despite participants' experience with ChatGPT, some general guidance was provided on its usage, for example, how to start conversations, how to explore various types of support (emotional vs. informational, etc.), and how to use the platform. The four types of social support (emotional, instrumental, informational, and appraisal) were explained to the respondents to ensure that the researchers and participants shared the same understanding of the constructs being investigated. However, we refrained from providing detailed examples to avoid influencing participants’ responses.

The participants were asked to interact with ChatGPT as often and as extensively as possible for any type of social support. After two weeks of engaging with ChatGPT, semi-structured interviews were conducted to delve deeper into the participants’ experiences and perceptions of their interactions with ChatGPT. Each interview lasted between 15 and 45 minutes.

3.1.4. Data Analysis

We employed framework analysis to develop the themes systematically by constantly comparing and contrasting the qualitative data. We performed a deductive analysis to identify and investigate patterns within the data. We categorized the data based on emotional, instrumental, informational, and appraisal support. Grounded theory was considered to be inappropriate for this research since our purpose was to analyze participant experiences through the lens of existing dimensions of perceived social support rather than to develop a new theory.

For data analysis, the interviews were audio-recorded, anonymized, and then transcribed. The framework analysis method was used to examine the interview transcripts. The transcripts were read thoroughly for familiarization, and passages pertinent to the research questions were underlined. The four categories of social support and their sub-themes served as the foundation of the thematic framework, within which the text was coded and indexed. We reached data saturation when additional interviews provided no new information; the recurrence of themes indicated that the topic had been examined thoroughly.
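The indexing step described above, in which coded passages are sorted under the four predefined dimensions, can be pictured as filling a participant-by-dimension matrix. The following is a minimal sketch under that assumption, not the authors' actual workflow or software: the names `DIMENSIONS`, `CODED_SEGMENTS`, and `chart` are hypothetical, and the three example segments simply reuse excerpts quoted in the Results.

```python
from collections import defaultdict

# The four a priori dimensions of perceived social support used as the
# deductive framework (Section 2.1).
DIMENSIONS = ("emotional", "instrumental", "informational", "appraisal")

# Hypothetical coded segments: (participant pseudonym, dimension, sub-theme, excerpt).
CODED_SEGMENTS = [
    ("Rahma", "emotional", "love", "I feel like this little robot loves me!"),
    ("Somayeh", "emotional", "trust", "I trusted her to give more personal details."),
    ("Sara", "emotional", "love (limitation)", "I found it boring and useless."),
]


def chart(segments):
    """Index coded segments into a participant-by-dimension framework matrix."""
    # Each new participant gets an empty cell for every dimension.
    matrix = defaultdict(lambda: {d: [] for d in DIMENSIONS})
    for participant, dimension, sub_theme, excerpt in segments:
        # Deductive coding: only the predefined dimensions are allowed.
        if dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dimension}")
        matrix[participant][dimension].append((sub_theme, excerpt))
    return dict(matrix)


matrix = chart(CODED_SEGMENTS)
for participant, row in matrix.items():
    # One line per participant: how many segments fall under each dimension.
    print(participant, {d: len(cells) for d, cells in row.items()})
```

Rejecting unknown dimensions mirrors the deductive design, in which codes are fitted to an existing framework rather than invented freely; the emergent limitation themes would be recorded as sub-themes within a dimension, as in the third example segment.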

Following a previous study's recommendation for objective interpretation of findings [59], two coders independently examined the interview transcripts to ensure the dependability of the analysis. This iterative ‘coding-re-coding’ process helped maintain the integrity of our research and keep the analysis faithful to what participants experienced. Initial coding results were compared, and any discrepancies were discussed in detail during reconciliation meetings aimed at reaching a consensus on the most accurate and representative code for each text segment. Where disagreements persisted, a third researcher was consulted to mediate and provide an objective perspective.
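The comparison of the two coders' initial results can be illustrated with a small helper that lists every segment on which they disagree, producing the agenda for a reconciliation meeting. This is a hypothetical sketch: `find_discrepancies`, the segment IDs, and the codes are invented for illustration, and it assumes each coder assigns a single dimension per segment.

```python
def find_discrepancies(coder_a, coder_b):
    """Return (segment ID, code A, code B) for segments the coders coded differently.

    Each argument maps a segment ID to the dimension that coder assigned.
    A segment coded by only one coder is also flagged (the missing code is None).
    """
    all_ids = sorted(set(coder_a) | set(coder_b))
    return [
        (seg, coder_a.get(seg), coder_b.get(seg))
        for seg in all_ids
        if coder_a.get(seg) != coder_b.get(seg)
    ]


# Hypothetical codes for four transcript segments.
coder_a = {"S1": "emotional", "S2": "instrumental", "S3": "informational", "S4": "appraisal"}
coder_b = {"S1": "emotional", "S2": "informational", "S3": "informational", "S4": "appraisal"}

for seg, a, b in find_discrepancies(coder_a, coder_b):
    # Each flagged segment goes to a reconciliation meeting; unresolved ones
    # are referred to a third researcher, as described in the text.
    print(f"{seg}: coder A = {a}, coder B = {b}")
```

Here only segment S2 is flagged, an instrumental-versus-informational disagreement of the kind the peer debriefing later singled out as a common overlap.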

Moreover, participants were individually sent their interview transcripts and the preliminary findings to review and verify. This member checking was intended to ensure that our interpretations were truly rooted in participants’ experiences. By engaging the participants in the authentication process, we enhanced the trustworthiness of the results. Based on their feedback, we refined our interpretations to better capture their perspectives.

Peer debriefing provided another layer of validation. Two researchers who had not participated in data collection or coding were debriefed about the process and asked to audit the data. For example, these sessions yielded valuable feedback on overlapping codes such as ‘instrumental support’ and ‘informational support’, and we scrutinized additional examples from the participants to resolve discrepancies in coding. In this way, we attempted to address bias and enhance the credibility of the data-grounded results.

4. RESULTS

Although we adopted a deductive approach to data analysis and sought patterns based on the PSS framework, we also included emergent themes on the limitations of the social support that ChatGPT offered female migrants. Table 2 presents a summary of the findings.

| Theme | Positive Aspects | Limitations |
|---|---|---|
| Emotional Support | Providing a sense of trust and privacy for introverts; using empathetic language that gives a sense of comfort; a safe space for sharing feelings | Lack of genuine human warmth; generic, robotic responses; limited ability to replicate human-like interaction |
| Instrumental Support | Assistance in professional tasks (e.g., emails, research); available 24/7; contributing to financial improvement; providing a low-cost alternative to therapy | None explicitly noted in findings |
| Informational Support | Helping in career transitions and skill development; striking a balance between work and personal life; providing quick, accurate health-related information | None explicitly noted in findings |
| Appraisal Support | Offering logical and detailed feedback for decision-making; encouraging evaluation of different perspectives | Lack of solid, decisive answers; limited to logical reasoning without deep personalization |

4.1. Theme 1: Emotional Support

Emotional support is an important human need that involves the provision of love, empathy, trust, and care. Theme 1 examines women migrants’ opinions on how ChatGPT can fulfill these crucial needs, exploring both the positive aspects and the limitations of the emotional support provided by ChatGPT based on user experiences.

4.1.1. Emotional Support: Positive Aspects

4.1.1.1. Love

Love has a complex meaning and can be perceived differently by each individual. One interviewee mentioned feeling loved by the bot. Rahma, who works at an accounting company, often shared her thoughts and worries over coffee with her sister or close friends when she lived in Tunisia. However, living far from home, she frequently had questions she did not want to share with her colleagues. Even after living in Dubai for over six months, she had yet to find close friends like those she had before migrating. She described how she used ChatGPT to receive emotional support during this time.

“When lying down on the bed, I ask ChatGPT questions. I end up sharing my experience and telling stories about what happened to me during the day. Even if I had a conflict with a colleague, I used to write it down. I feel like this little robot loves me!”

Rahma described how she shared her daily stories with the bot and felt loved by ChatGPT.

4.1.1.2. Trust

ChatGPT can provide social support if people find it trustworthy enough to start a conversation. Somayeh, an IT engineer working in a medium-sized company, considered herself an introvert with a limited number of close friends. She found a sense of empowerment through her interactions with ChatGPT.

“For me, it is not easy to express my feelings openly to others. I found it to be easy to ask my private questions, the ones that disturbed my mind from ChatGPT. Even though I think people do not want to hear about our negative feelings, I felt comfortable telling ChatGPT I had a terrible day, I felt like a crazy person, and I felt so bad. When ChatGPT continued asking me how she could assist me, and the conversation was continuing without intervention, I trusted her to give more personal details.”

Somayeh felt insecure about sharing her thoughts and feelings with others, but this apprehension was absent when communicating with ChatGPT. She felt more open to discussing her emotions, problems, and daily experiences, and she found a level of comfort and safety in conversing with ChatGPT that enabled her to express herself more openly and feel empowered in her communication.

4.1.1.3. Empathy

One of the interviewees was in awe of ChatGPT. She found a certain level of comfort in the interaction with ChatGPT. She appreciated ChatGPT’s use of empathetic language.

The level of engagement and emotional support that was provided served as a positive distraction and helped her get through work stress and anxiety on her worst days.

“There were days when I was getting super overwhelmed at work while panicking about all my deadlines. I was also using ChatGPT for work then. During my break, I decided to write about why I was feeling stressed and anxious. ChatGPT responded to me in such positive and empathetic language, which was very comforting.”

4.1.2. Emotional Support: Limitations

4.1.2.1. Love

Several interviews made it clear that although ChatGPT had some potential as a source of emotional support, it was not comparable with the love respondents received from close friends or family members. Some participants saw ChatGPT as falling short of face-to-face communication. Sara, an assistant architect from India who works in Oman, prefers to spend time with a real person in a real place rather than chatting with ChatGPT.

“Sometimes, you need to sit with your best friend by the beach, drink a coffee, and talk. When I was chatting with the bot, I found it boring and useless. It was like attending a boring lecture.”

The above statement indicates a yearning for real interaction and closeness that ChatGPT cannot supply. Sara's use of the words “boring” and “useless” characterizes her experience with ChatGPT and demonstrates a perceived lack of interest and value in the conversation with the bot. Her comparison of the conversation to attending a boring lecture underlines this idea further.

4.1.2.2. Empathy

Salma, a Sudanese lecturer in Oman, used ChatGPT as a companion a few times. She expressed dissatisfaction with its user interface, which she found overly robotic and mechanical and devoid of conversational flow when she sought emotional support.

“Ya, I used ChatGPT to get some comfort when I missed my family. But I couldn’t continue chatting with a boring, solid page to feel loved. I felt even more lonely when I found myself chatting with a robot, sitting in the living room alone. It’s been a while since my father’s death. I’ve always missed him. I miss seeing him or listening to his voice. When I feel overwhelmed at work, I wish I could get his advice on things. It would be incredible to see if there was an AI tool that could mimic him, his voice, and his behavior because that would change the whole experience for me. It wouldn’t be a robotic response, but rather a heartfelt response that I’ve been yearning for.”

Several important findings about human-computer interaction emerged in the interview with Salma. According to her, the interaction with ChatGPT lacked the comforting warmth of human interaction and love. The loneliness she felt at the absence of her father was so deep that ChatGPT could not fill it. When we asked how ChatGPT could be changed to make its feedback less mechanical and more human-like, she reflected on her father’s wisdom. She expressed a longing to hear his voice once more and suggested that AI could perhaps emulate her father’s advice-giving style and voice to bridge the gap and help her feel loved.

4.1.2.3. Generic Responses and Robot-like Language

Participants also found ChatGPT's language use excessively mechanical and lacking in human-like qualities. Zahra, a teacher, complained about ChatGPT's mechanical conversations, which may restrict its usefulness in providing emotional support to users, as reflected in the comment below:

“My inquiries into numerous areas revealed the bot’s exceptional capacities, but when it comes to emotional support, its language use, on the other hand, is extremely mechanical and lacks human-like features. I’ve observed that when I read the bot’s replies, they are too long and generic.”

Another frustrating aspect of using ChatGPT is its tendency to provide excessively long responses, which may not be welcome when users are not in the right mood. Diana, a draftsperson at an architecture firm, shared an experience of turning to the bot when she was not in the right mood and simply typed, “I am tired.” ChatGPT's lengthy, generic response left her dissatisfied and discouraged her from further interaction.

“I just wrote, ‘I am tired.’ ChatGPT replied: ‘I understand that feeling tired can make it difficult to get out of bed and go to work. If possible, try to give yourself a few more minutes to rest, but eventually, it’s important to get up and start your day. If this tiredness persists, it might be worth considering if there are any underlying factors affecting your sleep or energy levels that you should address.’ It was too long, too mechanical. When you are feeling down and turn to ChatGPT for support, these lengthy responses can make you feel even worse than those generic internet-generated answers. But when you talk with a friend who knows you and sees you, she might reply: ‘Hi, why? What happened to you? You are tired. Do you want a mango shake?’ It is what your friend might answer. When you are with a friend in person who has a better understanding of your character, she might offer a few words of encouragement, suggest going for a walk in the fresh air, or simply give you a comforting hug while assuring you that everything will be all right.”

Several points emerge from this account. First, Diana was looking for a quick, concise answer, but she found ChatGPT's response too long. Feeling tired, she was likely not in the mood to read long texts, and this was a significant reason she found ChatGPT somewhat off-putting as an AI companion. Second, Diana contrasted the generic response she received from ChatGPT with a conversation with, and the company of, a friend. She found interaction with a close friend far more satisfying than chatting with ChatGPT.

Several other individuals also believed the software lacks customization and cannot give personalized replies to individuals based on their unique requirements and preferences. In the interview, Fatima reflected on her lack of interest in interacting with ChatGPT for emotional support.

“I guess in the current version, the software lacks personalization and gives the same answers to all people around the world. I don’t feel good after long chatting with a bot and sharing my feelings.”

This suggests that ChatGPT may lack sensitivity to users' diverse needs and experiences, and that more personalized features could improve user engagement and satisfaction. Fatima's description of her experience also reflects dissatisfaction with automated responses.

One of the other participants reported a similar experience. She revealed that when she feels bored, she instinctively grabs her phone and starts scrolling through Instagram to get a quick dopamine fix. However, one day, when she was also feeling a bit lonely, she thought about starting a chat with ChatGPT. But she quickly halted the conversation because of how ChatGPT responded. It made her realize that she was essentially talking to a robot and not a human. This highlights that the problem is not just the robotic interface itself, but also the quality of responses it provides, which can be a significant limitation when using it as a companion tool.

“It has happened that I browse Instagram when I am bored. Once, I tried to chat with the bot. But I stopped it in the first few moments. If your feelings persist or worsen, consider seeking the help of a mental health professional who can provide guidance and support tailored to your specific situation.”

Zahra, a research assistant and mother of a five-year-old child, stated that during stressful moments away from her family, she turned to ChatGPT as a source of comfort. However, over time, she made a deliberate decision to reduce her reliance on ChatGPT. When asked why she chose to stop using it, she explained that she preferred social media platforms like Reddit. On these platforms, she received diverse responses from various strangers, which resonated more deeply with her needs than simply consuming AI-generated content.

“Upon reflection, it seems that one of the reasons my use of ChatGPT dwindled is that I enjoy reading first-hand experiences and advice from strangers on the internet. This human touch and anecdotal evidence are some things that I didn’t find on ChatGPT.”

The lack of human touch in ChatGPT's generic conversations appeared to lead some participants away from bots and toward reading first-hand accounts from other people as a source of comfort in the virtual environment.

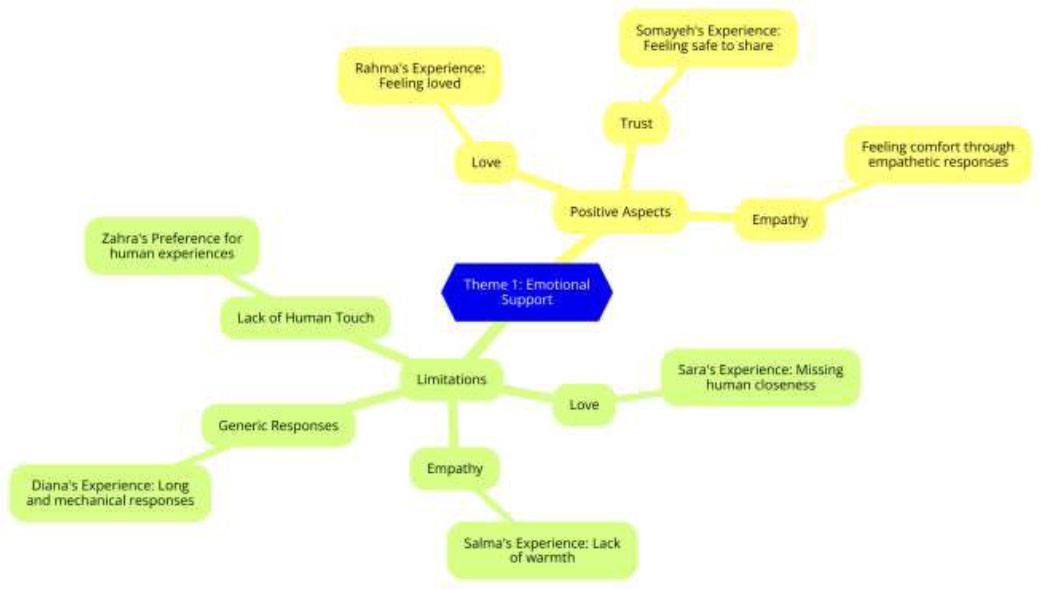

Interactions with ChatGPT were not as efficient as real-life interactions for female migrants when it came to emotional support (Fig. 2). Participants preferred human connection to AI interactions because ChatGPT’s responses were perceived to be robotic and devoid of human warmth. However, they appreciated the capability of AI in mimicking human-like qualities (e.g., a deceased father’s voice) and giving advice. Furthermore, ChatGPT was used by introverted individuals for free, authentic expression. While the participants highlighted the importance of privacy and confidentiality in AI interactions, they considered ChatGPT to be a safe space for sharing personal thoughts and feelings.

4.3. Theme 2: Instrumental Support

Instrumental support refers to the provision of tangible support in helping individuals to achieve their professional or personal goals. This theme explores how users employed ChatGPT for work-related tasks, time management, and financial improvement.

Positive aspects and limitations of ChatGPT in emotional support.

4.3.1. Instrumental Support Positive Aspects

4.3.1.1. Support in Individual or Professional Practice

Some women benefited from ChatGPT for work-related matters, such as writing an email or doing quick research on a topic.

“I only used ChatGPT to write my emails or texts to sound more professional, and maybe I needed to know about some work-related things. I would also use ChatGPT to do some quick research.”

In one of our discussions, we had the pleasure of speaking with Sahar, a dedicated lecturer, researcher, and AI enthusiast. Her profound understanding of AI has led her to view it as a modern revolution comparable in significance to the Industrial Revolution of the 19th century. Over the past five months, she has turned to ChatGPT for both professional and personal reasons. In her personal life, she primarily uses ChatGPT to assist with her daughter, seeking quick advice on how to keep her engaged and entertained daily, finding meal ideas, and even organizing her cluttered thoughts.

“As a mother who has to raise her child far away from the family, and is experiencing motherhood for the first time. I always have lots of questions in mind. Whenever I feel like I’m making a mistake in my parenting style or feel guilty about my performance towards my daughter, several times I've used a chatbot to express my feelings and get some comfort when none of my family members were available at the moment.”

4.3.1.2. Time

The availability of ChatGPT during all hours of the day, the weekends, or holidays was considered a positive and interesting aspect of using the AI for several respondents. This aspect is also important for the migrants as they live in different time zones compared to their home country. The statement below reveals one of the respondents’ positive feedback about the availability of ChatGPT throughout the day and night.

“I loved that it was free and always available to use. On nights when I was stressed and couldn’t sleep, I didn’t have to worry about finding or waking up my friends or family to talk to, but rather just opened my phone to use ChatGPT to share my thoughts and to de-stress.”

4.3.1.3. Financial improvement

Sana, a business owner, described how beneficial subscribing to ChatGPT has been since she quit her job and started her own business. As a solopreneur, she has to handle everything from administrative work to understanding case studies to providing solutions for clients. She said that ChatGPT has been a great help in getting all of this work done quickly and with accurate solutions.

“I recently started my own company. It was a bit scary as it was something that wasn’t exactly in my field of work, but was something I had learned and truly enjoyed from my previous job. But when there were queries or case studies that I had to understand for the job, I used ChatGPT to make sure the information or advice I was giving to my clients was accurate, and in the meantime, I was also learning more about how I could venture into growing my business and making a more steady income from it.”

4.3.1.4. Therapy and Psychological Consultations

Some respondents highlighted the value of ChatGPT when they were seeking therapy or looking for ways to talk about their problems and de-stress. Thina shared her experience of using ChatGPT for therapy and found it effective.

“Therapy is so expensive and not so pocket-friendly when you require it all the time. I thought about then trying out BetterHelp cause it was cheaper than going to my local therapist, and it was always readily available. But I didn’t earn enough and couldn’t afford to spend all my savings on therapy, so I had to sign out of it. Everyone was speaking about this new phenomenon called ChatGPT. I was super curious to see what it could do. I ended up using ChatGPT as an emotional support tool cause it was free and always available.”

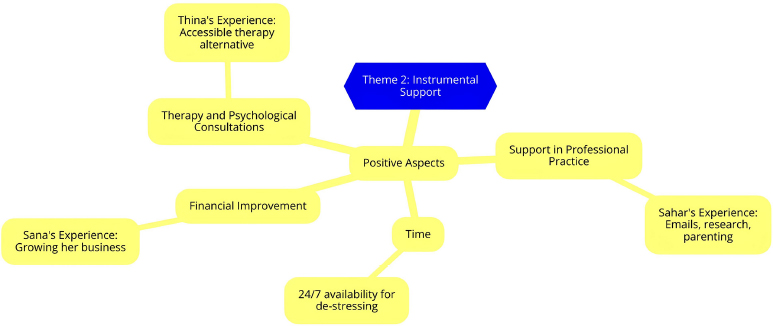

Tina’s experience highlights that the high cost of traditional therapy can be a significant financial barrier for migrants, often discouraging consistent attendance at consultation sessions. In search of alternatives, she sought help from a local therapist due to better availability and lower cost but still found therapy difficult to afford. Her experience with ChatGPT as an accessible source of mental health support was positive, as it met her needs without imposing a financial burden. Fig. (3) illustrates the positive aspects of ChatGPT in providing instrumental support.

4.4. Theme 3: Informational Support

Informational support involves providing the required information and overcoming personal or work-related difficulties. This theme reveals ChatGPT's potential in providing information for career transition and skill development, balancing work and personal life, as well as health and medications.

4.4.1. Informational Support: Positive Aspects

4.4.1.1. Career Transition and Skills Development

Informational support was reported as one of the most pleasing aspects of ChatGPT. After dedicating 11 years to being a stay-at-home mom, Tiffany, the Indian participant, made the bold decision to rejoin the workforce. As ChatGPT gained widespread attention, she became eager to explore its potential benefits in re-entering the job market from scratch.

“When I decided to rejoin the workforce, I felt overwhelmed. Starting from scratch was daunting, and I didn’t know where to begin. That’s when I turned to ChatGPT to explore potential career fields for freshers. I learned about the necessary skills for these roles and based on that, I enrolled in online courses. I also used ChatGPT to craft my CV and personal statements, ensuring that I could still make a strong impression in the job market as a fresher. It was a valuable resource in helping me navigate this transition.”

Positive aspects of ChatGPT for instrumental support.

Tiffany's account shows how ChatGPT supported her by providing information for decision-making as she started a new path in life. She acquired new competencies and used ChatGPT to craft her resumes and personal statements for job applications. The bot also appears to have helped her overcome some of the barriers that had kept her out of work for over a decade.

4.4.1.2. Balancing Work and Personal Life

Working mothers often struggle to balance work and personal life, managing the responsibility of raising children even if they are not solely responsible for running the household. Samiyah, a university lecturer who recently moved to one of the Gulf countries, shared that she has been using ChatGPT for both work-related and personal support over the past five months.

“As a working mom, my life is always very busy. The stress can mount up quickly because, you see, we working women face a double challenge of managing both our homes and our jobs. There are days when I turn to ChatGPT to help me untangle the chaos in my mind. Picture this: I have to cook, shop, clean, do the laundry, and get ready for work all in one day. It’s a lot to handle. So, I input all these tasks into ChatGPT, and it kindly sorts them out, telling me what should take top priority and even offering tips on how to tackle them efficiently. It truly helps me in getting my feelings and tasks in order.”

Initially, Samiyah discussed the challenges she faces in balancing her personal life and work-related tasks. She relies on ChatGPT for creating lectures, learning new skills, and brainstorming fresh ideas. In her personal life, she primarily uses ChatGPT to assist with her daughter—seeking quick advice on how to keep her busy and entertained daily, discovering new kid-friendly meal ideas, and organizing her muddled thoughts. This support has helped her better manage her day-to-day responsibilities, especially during the hectic pace of life as a working mother.

4.4.1.3. Health and Medications

Some interviewees found ChatGPT to be a quick and accessible source of information about health and medications. For example, Sanaa recently received the news of her mother's cancer diagnosis and struggled to cope with it emotionally.

“When I found out that my mother had cancer, it was devastating. It was hard on all of us. None of us knew about her cancer and had so many questions about it. Honestly, I’m so glad I knew about ChatGPT, as it helped answer all my worries. I was able to read and understand the condition in detail.”

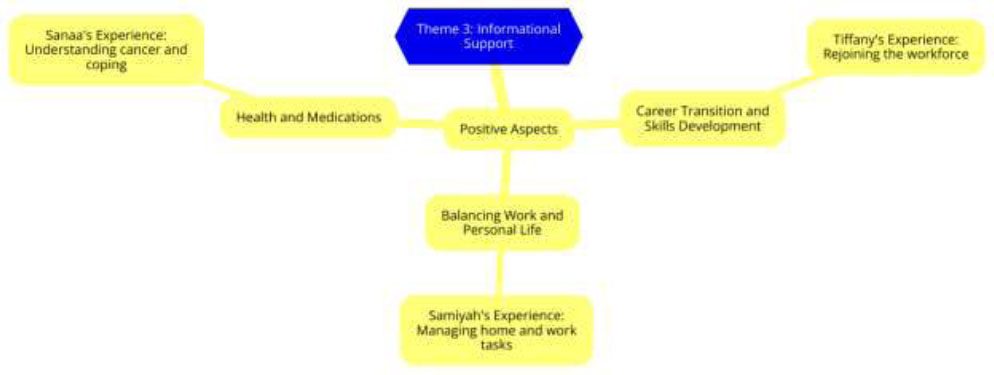

ChatGPT played an important role during this time, as it helped her understand her mother’s condition far better than surfing the internet randomly. It gave her all the information she needed in seconds. Fig. (4) presents the positive aspects of ChatGPT for informational support.

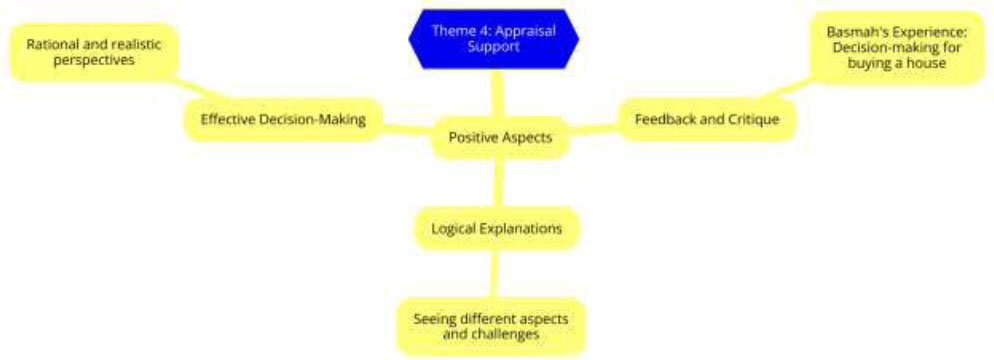

4.5. Theme 4: Appraisal Support

Appraisal support is about providing feedback on different aspects of human life and evaluating them. This theme explores how logical explanations offered by ChatGPT help individuals to make decisions effectively.

4.5.1. Theme 4.1: Appraisal Support Positive Aspects

Some of the respondents used ChatGPT to provide feedback or critique during the decision-making process. Basmah is an academician and shared her experience of working with ChatGPT in decision-making.

Positive aspects of ChatGPT for informational support.

Positive aspects of ChatGPT for appraisal support.

“I requested support for buying a house in Dubai. I gave a prompt to ChatGPT and asked it to act as a very professional real estate consultant. I explained that I was an Egyptian professor in Dubai and asked if investing and buying a house in a specific area was rational. The organized, step-by-step, and detailed explanation was very interesting. Even though, in general, I found ChatGPT helps you see different aspects of an issue from different angles, it does not give you a solid answer. Some of its comments are very interesting and logical, though.”

Basmah was impressed by ChatGPT's attempts to understand her questions and justify its answers. Some interviewees reported that the detailed, rational, and realistic ideas ChatGPT offered for their potential challenges reflected one of its strengths. Fig. (5) presents the positive aspects of ChatGPT for appraisal support.

5. DISCUSSION

This study aimed to address a gap in the ChatGPT and health literature by focusing on working female migrants' perceptions of ChatGPT 3.5’s potential in providing social support. It offers insights into the various types of social support that AI can provide as an alternative or complement to human support, particularly for this vulnerable group, who often face social isolation and limited access to in-person support. The study employed a qualitative framework analysis methodology, conducting in-depth interviews with 64 educated female migrants from across the Gulf region, including Bahrain, Kuwait, Oman, Qatar, Saudi Arabia, and the UAE.

The results showed that ChatGPT 3.5 had the potential to provide valuable emotional support to female migrants in the Gulf region in three categories: love, trust, and empathy. In this research, only one respondent linked the availability of the AI bot with feeling loved. Relatedly, Wong and Kim found that people assign human-like attributes, such as gender, to chatbots [60]. Further studies could explore AI-human interaction in more depth and its effect on individuals' unique experiences. Interestingly, ChatGPT 3.5 gained the trust of female migrants by providing a private space and using empathetic language. This result points to ChatGPT's capacity to provide emotional support and is consistent with previous studies reporting that ChatGPT showed greater emotional awareness than humans [61].

However, ChatGPT was perceived as failing to sustain emotional support because it is devoid of human warmth. Similarly, another study highlighted the absence of tailored emotional support as one of the major drawbacks of bots [62], and, in line with the present results, one study found that ChatGPT was capable of providing basic emotional support to various users [63]. Taken together, these findings suggest that while ChatGPT can offer basic emotional support, it cannot yet take the place of humans, which may be explained by its lack of human-like features.

Participants in this study emphasized the importance of instrumental support in facilitating their work and skill development. They appreciated unlimited access to relevant advice on various issues, including language learning, email communication, and research. Additionally, they noted that prompt feedback and accurate health information contributed to their well-being. Tailored guidance and reliable, immediate information from ChatGPT helped female migrants better balance their professional and personal lives, and its practical strategies enabled them to manage tasks effectively and prioritize responsibilities.

These findings align with previous research demonstrating the transformative impact of chatbots in enhancing patient education and health-related decision-making, as well as the versatility of ChatGPT's informational support in helping individuals navigate life challenges [64-67]. The results also resonate with another study, which highlighted how safety advice improved migrant workers' performance. Moreover, knowledge management has been shown to facilitate decision-making [49, 64, 68]. These findings are also consistent with a previous study, which found that ChatGPT's tailored solutions increased customer satisfaction and that contextualized AI integration can save time by prioritizing important tasks [69].

In terms of appraisal support, participants added that ChatGPT helped them engage in financial decision-making at very low cost. This result is in agreement with prior studies that reported how chatbots facilitated case management for immigrants. The results are also consistent with reports that ChatGPT is transforming the financial sector by making financial evaluation easier, giving individuals access to financial acumen and sophisticated analysis [53].

6. LIMITATIONS

This study has some limitations. First, because this qualitative study focused on immigrant women in the Gulf, the sample was deliberately homogeneous to allow an in-depth understanding of female immigrants' experiences. Using LinkedIn for purposive recruitment probably produced a sample made up primarily of working women. Such purposive sampling improves internal validity but limits generalizability. Future quantitative studies should include participants with a wider range of demographics to shed light on how chatbots can meet the needs of immigrants from different backgrounds, including different educational backgrounds, language skills beyond English, and socioeconomic backgrounds. Although a two-week timeframe is limited for a qualitative study, we focused on capturing in-depth data from 64 female migrants within the available period. Because a consistent and meaningful experience with ChatGPT was one of the study's criteria, we were able to identify cases that provided rich, in-depth data.

Another limitation of our study is the use of a predefined analytical framework based on dimensions of perceived social support. While this structure guided our analysis, it may have constrained the emergence of new, unforeseen themes, potentially overlooking other meaningful aspects of social support as perceived by participants. Future research could employ more flexible, inductive data analysis methods to capture a broader range of experiences.

To better understand how factors like education, language, and socioeconomic background influence perceptions of AI as a social support tool, future studies should include more diverse participant profiles. Addressing these limitations can provide deeper insights into AI’s role in social support and contribute to the development of culturally sensitive and empathetic AI technologies.

CONCLUSION

The study concluded that ChatGPT 3.5 offered valuable emotional, instrumental, informational, and appraisal support. Some drawbacks of ChatGPT included a lack of human warmth, excessively generic responses, and an inability to mimic the emotional depth of human interactions. Sometimes, its unwillingness to offer firm, definitive answers left users wanting more information. Furthermore, its reliance on logical thinking rather than contextual and emotional sensitivity limited its efficacy in highly customized decision-making situations. Notwithstanding these limitations, ChatGPT may enhance conventional support systems, particularly in situations where accessibility, comfort, and privacy are crucial.

IMPLICATIONS OF THE STUDY

The findings of this study carry considerable practical importance for AI developers, policymakers, and stakeholders in social support systems. The results can guide the development of generative AI like ChatGPT to better address the psychological and emotional needs of migrants. By embedding feedback loops that enable AI to learn and adapt more quickly to specific contexts, AI could achieve both efficiency and empathy, especially for vulnerable populations such as female migrants. Such feedback could also help stakeholders save time otherwise lost to failed analyses and streamline operational workflows. Additionally, the results can assist stakeholders in developing more effective instructional strategies to facilitate the integration of female workers. To address the limitations of ChatGPT, stakeholders need to involve migrants in the design process to optimize performance by considering users' needs based on their cultural backgrounds. Moreover, designers should train chatbots with culture- and context-specific data to avoid generic responses and provide more meaningful social support.

AUTHORS’ CONTRIBUTIONS

The authors confirm their contributions to the paper as follows: F.K.: Conceptualized the study and prepared the original draft of the manuscript; C.C.C.: Supervised the research and contributed to drafting and refining the manuscript; Q.U.I.: Responsible for developing the methodology and data analysis framework; Z.K.K.: Contributed to writing and editing the manuscript. All authors reviewed the results and approved the final version of the manuscript.

ETHICS APPROVAL AND CONSENT TO PARTICIPATE

The ethical approval for conducting the research was obtained from Dhofar University, Oman (DU-AY-24-25-QUFS-005).

HUMAN AND ANIMAL RIGHTS

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or research committee and with the 1975 Declaration of Helsinki, as revised in 2013.

CONSENT FOR PUBLICATION

All participants gave informed consent before participating in the study and were told that their participation was voluntary and that they could withdraw at any time without any consequences.

AVAILABILITY OF DATA AND MATERIALS

The data supporting the findings of this article are available from the corresponding author (F.K.) upon reasonable request.

ACKNOWLEDGEMENTS

Declared none.